|

OpenGL ES SDK for Android

ARM Developer Center

|

This document describes the related samples "ETCAtlasAlpha", "ETCCompressedAlpha", and "ETCUncompressedAlpha", which illustrate three different ways of handling alpha channels when using ETC1 compression.

The source for these samples can be found in the folders of the SDK.

This document is a guide to getting the most out of your partially transparent texture maps when developing software for the Mali GPU, by using hardware texture compression.

Just as the compression on a JPEG image allows more images to fit on a disk, texture compression allows more textures to fit inside graphics hardware, which is particularly important on mobile platforms. The Mali GPU has built-in hardware texture decompression, so a texture can remain compressed in graphics hardware while the required samples are decompressed on the fly. On the Mali Developer site there is a texture compression tool for compressing textures into the format recognised by the Mali GPU, the Ericsson Texture Compression format.

Ericsson Texture Compression version 1, or ETC1, is an open standard supported by Khronos and widely used on mobile platforms. It is a lossy algorithm designed for perceptual quality, based on the fact that the human eye is more sensitive to changes in luminance than to changes in chrominance.

One minor problem with this standard, however, is that textures compressed in the ETC1 format lose any alpha channel information and can have no transparent areas. As there are quite a few clever things that can be done using alpha channels in textures, this has led many developers to use other texture compression algorithms, many of which are proprietary formats with limited hardware support.

This document and associated code samples show several methods of getting transparency into textures compressed with the ETC1 standard.

The Mali Developer code samples already contain an example of loading an ETC1 texture and its prescaled mipmaps. For clarity, the snippets for these methods, and the full examples that illustrate a complete implementation, are based on that simple ETC1 loading example.

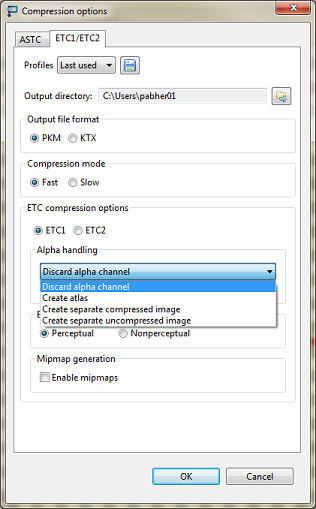

The first step in any of these methods is extracting the alpha channel from your textures. Since the alpha channel is not packed in the compressed texture, it has to be delivered alongside it. The alpha channel can be extracted with most graphics programs, but doing this by hand for every texture would be tedious, so the functionality is integrated into the ARM Mali Texture Compression Tool (from version 3.0). Whether, and how, the alpha channel is extracted is selected by choosing an Alpha handling option in the Compression Options dialog. This is shown in Figure 1.

The Texture Compression Command Line Tool also supports extracting the alpha channel. For full information about using these options see the Texture Compression Tool User Guide.

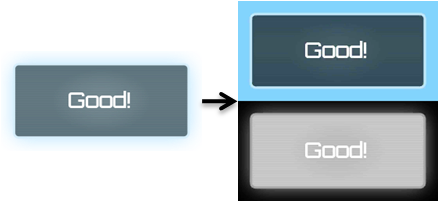

In the texture atlas method, the alpha channel is converted to a visible greyscale image, which is then concatenated onto the original texture, making the texture image taller.

To create compressed images suitable for use with this method select "Create atlas" in the Texture Compression Tool.

The sample "ETCAtlasAlpha" reads an image using this method.

This is the easiest method to implement: once the texture atlas image has been compressed, the only change required in your code is a remapping of texture coordinates in the shader, so that the single texture lookup becomes two lookups into the same atlas.

The remapping scales the texture coordinates to cover the top half of the image for the colour sample, then shifts them down to the bottom half, where the alpha channel is, for a second sample. The red channel of the mask is used to set the alpha channel of the result.
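The remapping described above can be sketched as follows. This is a minimal illustration rather than the sample's actual source: the identifiers `u_s2dTexture`, `v_v2TexCoord`, `Vec2`, `atlasColourCoord` and `atlasAlphaCoord` are all assumed names, and the shader is held in a C++ string purely so that it can be passed to `glShaderSource`. The two small C++ helpers mirror the shader arithmetic on the CPU so the mapping can be checked without a GPU.

```cpp
// Fragment shader (GLSL ES 1.00) for the atlas layout. Names are hypothetical.
const char* const kAtlasFragmentShader = R"glsl(
precision mediump float;
uniform sampler2D u_s2dTexture;   // atlas: colour in one half, mask in the other
varying vec2 v_v2TexCoord;        // ordinary 0..1 texture coordinates

void main()
{
    // Squash v into the first half of the atlas, where the colour data is.
    vec2 colourCoord = v_v2TexCoord * vec2(1.0, 0.5);
    vec4 colour = texture2D(u_s2dTexture, colourCoord);

    // The greyscale mask sits half an atlas further along v;
    // its red channel supplies the alpha value.
    colour.a = texture2D(u_s2dTexture, colourCoord + vec2(0.0, 0.5)).r;
    gl_FragColor = colour;
}
)glsl";

// CPU mirror of the same arithmetic (illustrative only).
struct Vec2 { float u; float v; };

inline Vec2 atlasColourCoord(Vec2 uv) { return { uv.u, uv.v * 0.5f }; }
inline Vec2 atlasAlphaCoord(Vec2 uv)  { return { uv.u, uv.v * 0.5f + 0.5f }; }
```

For example, the original coordinate (0.25, 1.0) samples colour at (0.25, 0.5) and alpha at (0.25, 1.0).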

More practically, you could instead add a second varying value to your vertex shader. The vertex shader then computes both sets of coordinates, and the fragment shader simply samples the atlas with the two varying coordinates.

This uses a little more bandwidth for the extra varying vec2, but makes better use of pipelining, particularly as most developers tend to do more work in their fragment shaders than their vertex shaders. With these minor changes most applications should run just fine with the texture atlas files.

However, there are cases when you might want to maintain the ability to wrap a texture over a large area. For that there are the other two methods, discussed below.

In the second method, the alpha channel is delivered as a second packed texture, and both textures are then combined in the shader code.

To create compressed images suitable for use with this method select "Create separate compressed image" in the Texture Compression Tool.

The sample "ETCCompressedAlpha" reads an image using this method.

When loading the second texture, be sure to call glActiveTexture(GL_TEXTURE1) before glBindTexture and glCompressedTexImage2D, so that the alpha channel is bound to a different hardware texture unit from the colour texture.

When setting your shader uniform variables, you will need to allocate a second texture sampler and bind it to the second texture unit. Then, inside your fragment shader, once again merge the two samples, this time from different textures.

In the third method, the alpha channel is provided as a raw 8-bit single-channel image, which is combined with the texture data in the shader.

To create compressed images suitable for use with this method select "Create separate compressed image" in the Texture Compression Tool.

Depending on what other options are selected, this will produce either a single PGM file, a PGM file for each mipmap level, or a single KTX file containing all mipmap levels as uncompressed data. The PGM format is described at http://netpbm.sourceforge.net/doc/pgm.html. The KTX format is described at http://www.khronos.org/opengles/sdk/tools/KTX/file_format_spec/.

You will need to implement a new method to load and bind this texture but, because the data is uncompressed, loading it is fairly simple.

The sample "ETCUncompressedAlpha" reads an image using this method.

Once loaded, the colour and alpha textures are bound to separate active texture units as before, and each is passed to the fragment shader through its own sampler. Inside the fragment shader, the two samples are then merged, again from the two different textures.