OpenGL ES SDK for Android

ARM Developer Center

This document describes how to use compressed ASTC textures.

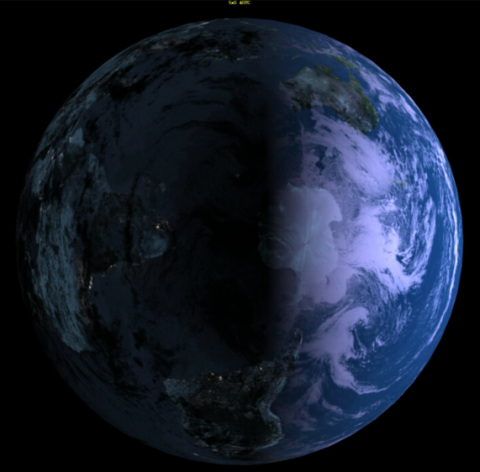

This tutorial shows how Adaptive Scalable Texture Compression (ASTC) can be used in a simple scene: a textured sphere spinning in 3D space. It simulates an Earth globe lit from one side, with a camera zooming in and out on its surface.

The ASTC algorithm represents a group of lossy, block-based compressed texture image formats. For information on why texture compression is needed, see chapter Why use texture compression? Two- and three-dimensional textures may be encoded using low or high dynamic range (LDR or HDR) [1]. This example considers only 2D LDR textures. The basic concept of the encoding is to divide the compressed image into a number of blocks of uniform size. Each block is stored with a fixed 16-byte (128-bit) footprint, regardless of the block's dimensions. A block can therefore represent a varying number of texels, and the bit rate is determined by the block size (see the table below): bit rate = 128 bits / (block width × block height). This allows content developers to fine-tune the trade-off between space and quality.

| Block size | Bit rate |
|---|---|
| 4x4 | 8.00 bpp |
| 5x4 | 6.40 bpp |
| 5x5 | 5.12 bpp |
| 6x5 | 4.27 bpp |
| 6x6 | 3.56 bpp |
| 8x5 | 3.20 bpp |
| 8x6 | 2.67 bpp |
| 8x8 | 2.00 bpp |
| 10x5 | 2.56 bpp |
| 10x6 | 2.13 bpp |
| 10x8 | 1.60 bpp |
| 10x10 | 1.28 bpp |
| 12x10 | 1.07 bpp |
| 12x12 | 0.89 bpp |
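Because every block occupies 128 bits, each entry in the table is simply 128 divided by the number of texels in the block. The short C++ sketch below reproduces the table values; the function name astc_bits_per_texel is hypothetical and not part of the SDK:

```cpp
#include <cassert>

// Every ASTC block is stored in 128 bits (16 bytes), whatever its footprint.
constexpr int kAstcBlockBits = 128;

// Bit rate in bits per texel (bpp) for a 2D block of block_w x block_h texels.
double astc_bits_per_texel(int block_w, int block_h) {
    assert(block_w > 0 && block_h > 0);
    return static_cast<double>(kAstcBlockBits) / (block_w * block_h);
}
```

For example, astc_bits_per_texel(6, 6) returns 128 / 36 ≈ 3.56, matching the 6x6 row.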

ASTC also supports 1 to 4 color channels, together with modes for uncorrelated channels for use in mask textures and normal maps. Decoding one texel requires data from a single block only, which greatly simplifies cache design, reduces bandwidth and improves encoder throughput. Despite this flexibility, ASTC achieves peak signal-to-noise ratios (PSNR) better than or comparable to existing texture compression algorithms (e.g. ETC1/ETC2).
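PSNR compares a decoded image with the original through the mean squared error (MSE): for 8-bit channels, PSNR = 10 · log10(255² / MSE) dB. A minimal C++ sketch, assuming both images are 8-bit and flattened into byte arrays of equal length (psnr_8bit is a hypothetical helper, not part of the SDK):

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

// Peak signal-to-noise ratio in dB between two 8-bit images of equal size,
// with all channels flattened into one array. Identical images give +infinity.
double psnr_8bit(const std::vector<std::uint8_t>& reference,
                 const std::vector<std::uint8_t>& decoded) {
    assert(reference.size() == decoded.size() && !reference.empty());
    double sum_sq = 0.0;
    for (std::size_t i = 0; i < reference.size(); ++i) {
        const double diff = static_cast<double>(reference[i]) - decoded[i];
        sum_sq += diff * diff;
    }
    const double mse = sum_sq / reference.size();
    if (mse == 0.0) return std::numeric_limits<double>::infinity();
    return 10.0 * std::log10(255.0 * 255.0 / mse);
}
```

Two images that differ by exactly 1 in every channel have an MSE of 1 and therefore a PSNR of about 48.13 dB; higher values mean the decoded image is closer to the original.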

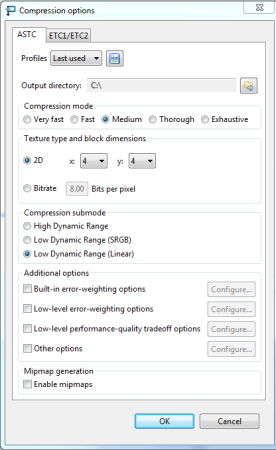

You can preview and analyse how your texture image looks after decompression using the Mali GPU Texture Compression Tool (TCT) [2]:

You can compress your texture image to ASTC format using the command-line interface of the ASTC evaluation codec [3]:

In this sample, the sphere is covered with a color that results from combining colors from three texture units: the cloud and gloss unit, the daytime unit and the nighttime unit. Hence, all compressed ASTC textures must first be loaded and specified for their targets, producing texture bindings that are then bound to particular texture units. This is the job of the load_texture() function, which follows these steps:

Finally, glCompressedTexImage2D is invoked.
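Before glCompressedTexImage2D can be called, a loader needs the texture dimensions, the block size and the size of the compressed payload. The sketch below assumes the textures are stored in the .astc file format written by the ASTC evaluation codec: a 16-byte header (magic number 0x5CA1AB13, three 1-byte block dimensions, then width, height and depth as 24-bit little-endian values) followed by the block data. The helper names are hypothetical and do not come from the sample's load_texture():

```cpp
#include <cassert>
#include <cstdint>

// Header fields of a .astc file (16 bytes in total, little-endian):
// 4-byte magic, block_x, block_y, block_z (1 byte each), then width,
// height and depth stored as 24-bit integers.
struct AstcHeader {
    unsigned block_x, block_y, block_z;
    unsigned width, height, depth;
};

// Reads a 24-bit little-endian unsigned integer.
static unsigned read_u24(const std::uint8_t* p) {
    return unsigned(p[0]) | (unsigned(p[1]) << 8) | (unsigned(p[2]) << 16);
}

// Parses the first 16 bytes of a .astc file; returns false on a bad magic number.
bool parse_astc_header(const std::uint8_t* h, AstcHeader* out) {
    const std::uint32_t magic = std::uint32_t(h[0]) | (std::uint32_t(h[1]) << 8) |
                                (std::uint32_t(h[2]) << 16) | (std::uint32_t(h[3]) << 24);
    if (magic != 0x5CA1AB13u) return false;
    *out = AstcHeader{h[4], h[5], h[6], read_u24(h + 7), read_u24(h + 10), read_u24(h + 13)};
    return true;
}

// Compressed payload size in bytes (the imageSize argument of
// glCompressedTexImage2D): 16 bytes per block, counting partial edge blocks.
std::uint32_t astc_image_size(const AstcHeader& hd) {
    assert(hd.block_x > 0 && hd.block_y > 0 && hd.block_z > 0);
    const std::uint32_t blocks_x = (hd.width + hd.block_x - 1) / hd.block_x;
    const std::uint32_t blocks_y = (hd.height + hd.block_y - 1) / hd.block_y;
    const std::uint32_t blocks_z = (hd.depth + hd.block_z - 1) / hd.block_z;
    return blocks_x * blocks_y * blocks_z * 16u;
}

// GL_COMPRESSED_RGBA_ASTC_<w>x<h>_KHR enums run consecutively from 0x93B0 (4x4)
// to 0x93BD (12x12), in the same order as the block-size table.
unsigned astc_gl_internal_format(unsigned block_w, unsigned block_h) {
    static const unsigned kDims[14][2] = {{4, 4}, {5, 4}, {5, 5}, {6, 5}, {6, 6},
                                          {8, 5}, {8, 6}, {8, 8}, {10, 5}, {10, 6},
                                          {10, 8}, {10, 10}, {12, 10}, {12, 12}};
    for (unsigned i = 0; i < 14; ++i) {
        if (kDims[i][0] == block_w && kDims[i][1] == block_h) return 0x93B0u + i;
    }
    return 0;  // not a valid 2D ASTC block size
}
```

The returned image size is what glCompressedTexImage2D expects as its imageSize argument, and astc_gl_internal_format() supplies the matching GL_COMPRESSED_RGBA_ASTC_*_KHR value for internalformat. Partial blocks at the right and bottom edges still occupy a full 16 bytes, which is why the block counts are rounded up.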

After a new texture ID has been generated, the texture object can be bound to a target. The compressed data can then be passed to the driver.

Texture parameters are set up for the GL_TEXTURE_2D target.

The texture magnification and minification functions are set to GL_LINEAR, which corresponds to linear filtering. Texture filtering is the process of computing fragment colors from a stretched or shrunken texture map. For a 2D texture, linear filtering takes a weighted average of the 2x2 texels nearest to the texture coordinates. The texture wrapping modes are set to GL_REPEAT, which means that the textures are repeated across the object.
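The following CPU-side C++ sketch illustrates what GL_LINEAR filtering combined with GL_REPEAT wrapping computes for a single-channel texture. It is a simplified model for explanation only (the GPU works on decoded ASTC texels with its own precision), and the Texture type and function names are hypothetical:

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Single-channel texture stored row-major, used only for illustration.
struct Texture {
    int width, height;
    std::vector<float> texels;
    float at(int x, int y) const { return texels[y * width + x]; }
};

// GL_REPEAT-style wrap of an integer texel index into [0, size).
int wrap_repeat(int i, int size) {
    const int m = i % size;
    return m < 0 ? m + size : m;
}

// Bilinear (GL_LINEAR) sample at normalized coordinates (u, v): the weighted
// average of the 2x2 texels nearest to the sample point, with repeat wrapping.
float sample_linear_repeat(const Texture& t, float u, float v) {
    assert(t.width > 0 && t.height > 0);
    // Texel centers sit at half-integer positions, so shift by 0.5.
    const float x = u * t.width - 0.5f;
    const float y = v * t.height - 0.5f;
    const int x0 = static_cast<int>(std::floor(x));
    const int y0 = static_cast<int>(std::floor(y));
    const float fx = x - x0, fy = y - y0;  // interpolation weights
    const int xa = wrap_repeat(x0, t.width), xb = wrap_repeat(x0 + 1, t.width);
    const int ya = wrap_repeat(y0, t.height), yb = wrap_repeat(y0 + 1, t.height);
    const float top = (1 - fx) * t.at(xa, ya) + fx * t.at(xb, ya);
    const float bottom = (1 - fx) * t.at(xa, yb) + fx * t.at(xb, yb);
    return (1 - fy) * top + fy * bottom;
}
```

For a 2x1 texture holding {0, 1}, sampling at u = 0.5 (halfway between the texel centers) returns 0.5, and sampling at u = 0.0 also returns 0.5 because GL_REPEAT wraps the left neighbour around to the last texel.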

Once we have all texture bindings for the GL_TEXTURE_2D target, we can use and switch between them as needed. This is the job of the update_texture_bindings() function.

Every 5 seconds, the texture bindings for the texture units are refreshed, following the block size order from the table in section What is ASTC?
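The switching schedule can be expressed as a mapping from elapsed time to a row of the block-size table. This sketch assumes the sequence wraps back to 4x4 after 12x12; the constants and the block_size_index() name are hypothetical and not taken from update_texture_bindings():

```cpp
#include <cassert>

// Number of rows in the block-size table and the switching period (assumed).
constexpr int kNumAstcBlockSizes = 14;
constexpr double kSwitchPeriodSeconds = 5.0;

// Row of the block-size table to use after elapsed_seconds, advancing one
// row every 5 seconds and wrapping around at the end of the table.
int block_size_index(double elapsed_seconds) {
    assert(elapsed_seconds >= 0.0);
    const long step = static_cast<long>(elapsed_seconds / kSwitchPeriodSeconds);
    return static_cast<int>(step % kNumAstcBlockSizes);
}
```

For example, at 12 seconds block_size_index() returns 2, which selects the 5x5 textures.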

You should see visual output similar to:

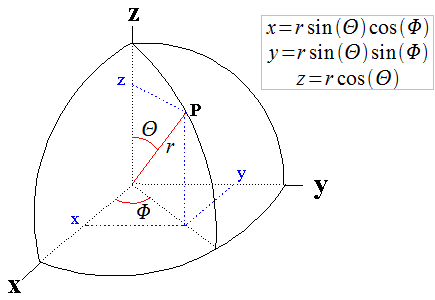

Vertex positions, texture coordinates and normal vectors are generated using the spherical coordinate system. All mesh data are produced by the solid sphere constructor.

The user is responsible for passing the radius, the number of rings (parallels) and the number of sectors (meridians) to the constructor. It computes the coordinates of each vertex according to the formulas:

In this model space, Φ ranges from 0 to 360 degrees and Θ from 0 to 180 degrees. As depicted in the figure, the Θ range is divided by the number of rings and the Φ range by the number of sectors. The smoothness of the sphere surface depends on the number of vertices: more rings and sectors produce a smoother sphere at the cost of more geometry.

For a given vertex position P on a sphere whose center is C, the normal is norm(P - C), where norm normalizes the vector. Our sphere is centered at the origin, C = (0,0,0), so norm(P - C) = norm(P) = P / radius = (x/radius, y/radius, z/radius). Why normal vectors are needed for lighting calculations is explained in detail in section Normals.
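A minimal sketch of such a generator follows. It assumes one common convention, which may differ from the sample's: y is the polar axis, Θ is measured from the north pole, x = r·sinΘ·cosΦ, y = r·cosΘ and z = r·sinΘ·sinΦ. It divides the angular ranges by (rings - 1) and (sectors - 1) so that the last ring reaches the south pole and the last sector closes the seam, and it derives texture coordinates as (s / (sectors - 1), r / (rings - 1)). These choices, and the SphereMesh and make_sphere names, are assumptions:

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { float x, y, z; };
struct Vec2 { float u, v; };

struct SphereMesh {
    std::vector<Vec3> positions;
    std::vector<Vec3> normals;
    std::vector<Vec2> texcoords;
};

// Assumed convention: theta in [0, pi] from the +y pole, phi in [0, 2*pi] around y.
SphereMesh make_sphere(float radius, int rings, int sectors) {
    assert(radius > 0.0f && rings >= 2 && sectors >= 2);
    const float kPi = 3.14159265358979f;
    SphereMesh m;
    for (int r = 0; r < rings; ++r) {
        const float theta = kPi * r / (rings - 1);
        for (int s = 0; s < sectors; ++s) {
            const float phi = 2.0f * kPi * s / (sectors - 1);
            const Vec3 p{radius * std::sin(theta) * std::cos(phi),
                         radius * std::cos(theta),
                         radius * std::sin(theta) * std::sin(phi)};
            m.positions.push_back(p);
            // The center is the origin, so the unit normal is simply P / radius.
            m.normals.push_back({p.x / radius, p.y / radius, p.z / radius});
            m.texcoords.push_back({static_cast<float>(s) / (sectors - 1),
                                   static_cast<float>(r) / (rings - 1)});
        }
    }
    return m;
}
```

Because the center is the origin, each normal is obtained by dividing the position by the radius, exactly as in the derivation of norm(P) above.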

Texture coordinates, which indicate how to map an image onto mesh primitives, are covered in detail in section Load Texture Function.

The solid sphere constructor is also responsible for generating the indices required by glDrawElements. Each group of four adjacent vertices is assumed to form two triangle primitives (GL_TRIANGLES mode), so the glDrawElements call needs six vertex indices per group to construct them:
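Assuming vertices are stored ring by ring, so that vertex (r, s) has index r · sectors + s, the two triangles of each quad can be emitted as in the sketch below. The function name, the 16-bit index type (matching GL_UNSIGNED_SHORT in glDrawElements) and the triangle winding are assumptions for illustration:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// GL_TRIANGLES indices for a rings x sectors vertex grid stored row by row
// (vertex index = r * sectors + s). Each quad becomes two triangles.
std::vector<std::uint16_t> make_sphere_indices(int rings, int sectors) {
    assert(rings >= 2 && sectors >= 2 && rings * sectors <= 65536);
    std::vector<std::uint16_t> indices;
    indices.reserve(static_cast<std::size_t>(rings - 1) * (sectors - 1) * 6);
    for (int r = 0; r < rings - 1; ++r) {
        for (int s = 0; s < sectors - 1; ++s) {
            const auto i0 = static_cast<std::uint16_t>(r * sectors + s);  // this ring
            const auto i1 = static_cast<std::uint16_t>(i0 + 1);
            const auto i2 = static_cast<std::uint16_t>(i0 + sectors);     // next ring
            const auto i3 = static_cast<std::uint16_t>(i2 + 1);
            // Six indices per quad: triangles (i0, i2, i1) and (i1, i2, i3).
            indices.insert(indices.end(), {i0, i2, i1, i1, i2, i3});
        }
    }
    return indices;
}
```

The resulting indices would be drawn with glDrawElements using GL_TRIANGLES and GL_UNSIGNED_SHORT, passing the index count (six per quad). If back-face culling removes the wrong side of the sphere, swap the winding of each triangle.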

Next, the generated vertex positions, normals and texture coordinates are loaded into a buffer object.

After the above listing has executed, bo_id contains the unique ID of a buffer object that has been initialized with buffer_total_size bytes of storage. Binding the buffer object to the GL_ARRAY_BUFFER binding point indicates to OpenGL ES that we are about to supply data to feed vertex attributes. The expected usage pattern of the data store is set to GL_STATIC_DRAW, which signals to the graphics driver that the buffer content will be set once but used frequently for drawing.
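The value of buffer_total_size and the offsets at which each kind of mesh data is placed follow from the vertex count. This sketch assumes a non-interleaved layout (all positions, then all normals, then all texture coordinates, each as tightly packed floats); the BufferLayout type and compute_layout() are hypothetical:

```cpp
#include <cassert>
#include <cstddef>

// Byte offsets of each attribute block inside one buffer object, plus its total size.
struct BufferLayout {
    std::size_t positions_offset, normals_offset, texcoords_offset, total_size;
};

// Assumed layout: [positions | normals | texcoords], no interleaving or padding.
BufferLayout compute_layout(std::size_t vertex_count) {
    assert(vertex_count > 0);
    const std::size_t positions_bytes = vertex_count * 3 * sizeof(float);  // x, y, z
    const std::size_t normals_bytes = vertex_count * 3 * sizeof(float);    // nx, ny, nz
    const std::size_t texcoords_bytes = vertex_count * 2 * sizeof(float);  // u, v
    BufferLayout l;
    l.positions_offset = 0;
    l.normals_offset = positions_bytes;
    l.texcoords_offset = positions_bytes + normals_bytes;
    l.total_size = positions_bytes + normals_bytes + texcoords_bytes;
    return l;
}
```

Each offset would be passed to glBufferSubData when uploading that attribute, and again, cast to const void*, as the last argument of glVertexAttribPointer while the buffer is bound to GL_ARRAY_BUFFER.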

Then, place the mesh data into the buffer object at the corresponding offsets:

Before we can go on, let's create a vertex array object to store vertex array state.

Once it has been generated and bound, we can fill in its state. The goal is to make OpenGL ES take vertex attribute values directly from the data stored in the supplied buffer object.

Fetching of each vertex attribute from an array is enabled with glEnableVertexAttribArray calls, and glVertexAttribPointer tells the graphics driver where each attribute's data is located. Because a non-zero buffer is bound to the GL_ARRAY_BUFFER target, the last argument of glVertexAttribPointer is interpreted as a byte offset into that buffer's data store rather than as a pointer to client memory.

This sample uses the Phong lighting model, which is explained in detail in section Lighting.

[1] http://www.khronos.org/registry/gles/extensions/KHR/texture_compression_astc_hdr.txt

[2] http://malideveloper.arm.com/develop-for-mali/tools/asset-creation/mali-gpu-texture-compression-tool/

[3] http://malideveloper.arm.com/develop-for-mali/tools/astc-evaluation-codec/